In this project I implement an evolutionary model, built using genetic algorithms, that plays my first Python project. If you haven't seen it yet, you can play it here.

It uses a system that "evolves" its way of playing, inspired by how nature improves species over generations. At the beginning, the program creates a bunch of players with no knowledge of the environment, who are rewarded for achieving high scores and told they can move up or down.

Initially, they simply press these keys randomly, until, within that chaos, one manages to dodge the first wall. This allows it to score higher than the rest, and that small "success" is passed on to the next generation. The cycle repeats over and over. As the process converges, they begin to discover that dodging walls and collecting stars leads to higher scores.

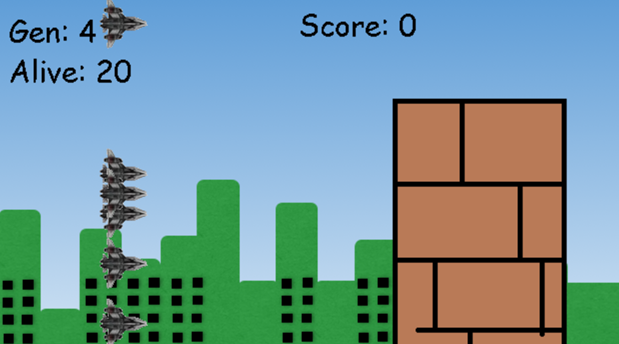

Below you can watch in real-time how this genetic algorithm is trained. Each generation starts with 20 individuals; when all die, a new generation is created by combining the best genes from the previous one along with some random mutations, and the process starts again. You can try playing against the bot—the longer you let it train, the better it will be.

After the simulator, I leave the more technical details for those who are interested.

Genetic Algorithms

Genetic algorithms are artificial intelligence techniques based on the principles of biological evolution. They work through iterative selection of candidate solutions, favoring those with better performance.

The process consists of the following stages:

- Initial population: Multiple individuals are generated with random parameters (neural networks with randomly initialized weights and connections)

- Evaluation: Each individual executes the task and accumulates a score (fitness) based on its performance

- Selection: Individuals with higher fitness are selected as parents

- Reproduction and mutation: New individuals are created by combining characteristics from the parents and applying random modifications

- Iteration: The cycle repeats, progressively improving the population's performance

This approach allows finding complex solutions without the need to explicitly program the desired behavior. Unlike traditional supervised learning, where a labeled dataset is required, genetic algorithms discover solutions through heuristic search in the space of possible configurations.

NEAT: NeuroEvolution of Augmenting Topologies

NEAT (NeuroEvolution of Augmenting Topologies) is a specialized genetic algorithm that simultaneously evolves both the weights and the structure of neural networks. Unlike conventional methods where the architecture is fixed, NEAT starts from minimal networks that become more complex according to the problem's needs.

Main characteristics of NEAT:

- Incremental complexification: Starts with networks without hidden layers (minimal topology), adding neurons and connections through structural mutations. This approach solves the evolutionary bootstrapping problem, where initially complex structures hinder convergence

- Speciation: Groups similar individuals into species using a compatibility coefficient that measures topological and weight differences. This protects structural innovations that may initially reduce fitness, allowing their refinement before competing with established solutions

- Historical innovation markers: Uses unique identifiers (innovation numbers) to perform coherent genetic crossovers between different topologies, solving the alignment problem in crossover of networks with different structures

This approach allows the network's complexity to emerge naturally during training, avoiding both the overfitting of excessively complex architectures and the underfitting of overly simple structures.

Implemented Genetic Operators

The algorithm applies two main types of mutations:

Weight mutations (80% probability):

- Gaussian perturbation of existing connections (±0.5 of current value)

- Complete weight replacement (10% of mutations)

- Allowed range: [-30, 30] to avoid extreme values that destabilize learning

Structural mutations:

- Connection addition (50% probability): Creates links between previously disconnected neurons

- Node addition (20% probability): Splits an existing connection by inserting an intermediate neuron

- Component deletion (50% and 20% respectively): Keeps the population diverse without unnecessary complexity

The configuration uses the tanh activation function, which transforms input values to a bounded range between -1 and 1. This introduces the non-linearity necessary for the network to adjust its behavior flexibly.

Speciation and Diversity

The algorithm maintains compatibility threshold = 3.0, where the genetic distance between two individuals is calculated as:

δ = c₁·(E/N) + c₂·W̄

Where:

- E: Disjoint/excess genes (structural differences)

- W̄: Average weight difference in matching genes

- c₁ = 1.0, c₂ = 0.5: Coefficients that prioritize structural differences over weight variations

- N: Normalization by the larger genome size

Species with stagnation > 20 generations (without fitness improvement) are eliminated, except for the 2 best species (species elitism). This balances exploration of new solutions and exploitation of promising strategies.

Project Implementation

The game consists of an endless runner where a spaceship must dodge procedural obstacles (upper and lower columns) while collecting power-ups. The objective is to train an agent using NEAT that learns to maximize survival.

Input/Output Architecture

The neural network processes 5 normalized input variables:

- Rocket's vertical position (0-300 pixels): Agent's current state

- Horizontal distance to next obstacle (0-1024 pixels): Critical temporal information

- Obstacle's vertical position (-200 or 100 pixels): Discriminates between upper/lower threat

- Horizontal distance to next power-up (0-3072 pixels): Reward opportunity

- Power-up's vertical position (50-250 pixels): Spatial location of the secondary objective

Generates 2 outputs that determine the action through threshold activations (>0.5):

- Output 0: Upward vertical displacement (10 pixels/frame)

- Output 1: Downward vertical displacement (10 pixels/frame)

- Both <0.5: Maintain position (updates internal timers)

This representation faces the temporal credit assignment problem: how to attribute success/failure to actions taken several frames before the event? NEAT solves this implicitly through cumulative fitness.

Multi-Objective Fitness Function

The evaluation combines three components that reflect different aspects of optimal behavior:

Base survival (0.1 points/frame):

- Rewards exploration and risk avoidance

- At 30 FPS, equals 3 points/second of survival

- Implicitly penalizes passive strategies through termination upon collision

Power-up collection (10 points/unit):

- Incentivizes active searching behavior

- Requires balance between survival and risk-taking

- Power-ups appear with 30% probability after each obstacle

Obstacle clearance (1 point/unit):

- Temporal progress metric

- Obstacles appear approximately every 400 pixels

- Correlates directly with survival time

The trade-off between these objectives emerges naturally: maximizing power-ups implies risky maneuvers, while avoiding obstacles favors conservative trajectories.

Difficulty Scaling

The system implements three modes that modify the scenario scroll speed:

- Easy (15 px/frame): Reaction window of ~40 frames for an obstacle at typical distance

- Normal (25 px/frame): Window of ~24 frames, requires anticipation

- Hard (35 px/frame): Window of ~17 frames, demands near-instantaneous responses

This variation fundamentally alters the fitness landscape: higher speeds penalize reactive strategies and favor predictive policies based on distance information. The difficulty increase is not linear due to the game's physics (acceleration limits, gap sizes, etc.).

Training Strategies

Each generation evaluates 20 genomes in parallel for a maximum of 50 generations, with:

- Elitism = 2: The two best individuals from each species pass directly to the next generation without mutation

- Survival threshold = 20%: Only the top quintile of each species reproduces

- Fitness limit = 1000 points: Early termination criterion (approx. 300 seconds of perfect survival)

Training typically converges in no more than 10 generations.